Alexandria Brasili, Sue Allen, Margaret Foster

June 2017

For more information:

Email research@mmsa.org

Or visit www.mmsa.org

Background

Since 2014, the Maine Mathematics and Science Alliance (MMSA) has received a series of grants from the Noyce Foundation, STEMNext, the Davis Family Foundation, and the NSF AISL program to create a coaching system that supports out-of-school-time (OST) educators, such as afterschool providers, who facilitate STEM activities in their programs. Implementation partners for the work include the University of Maine, Office of Extension (4-H), the National Afterschool Association, and Vermont Afterschool. While this work is ongoing (www.mmsa.org/acres), we present here an evaluation report for its first phase.

Introduction

The Afterschool Alliance (2014) reports that afterschool programs are growing rapidly, serving over 10 million children in the U.S. annually. Of these, an estimated 69% offer some kind of STEM activities (Afterschool Alliance, 2015). Despite this growing demand for STEM programming, many afterschool educators have little or no background in STEM education and often receive little or no professional development. The ACRES project is a response to that national need.

Outline of the ACRES coaching program

The ACRES (Afterschool Coaching for Rural Educators in STEM) project provides high-quality STEM coaching for small groups of out-of-school educators, particularly afterschool providers. Participants in this professional development opportunity learn a skill during a workshop, videotape their own work with youth in their individual settings, and reflect on their teaching practice by watching and discussing their videos with other participants in their cohort and their ACRES coach.

ACRES training can take one of three formats: in-person, virtual, or blended. The in-person model allows participants to gather at a physical site to complete the training. However, to accommodate the distances between educators in rural settings, fully virtual and blended in-person/virtual models are also available; these use videoconferencing to bring educators together.

The full skill-based curriculum in ACRES is composed of six modules:

- Asking Purposeful Questions

- Modeling the Engineering and Design Process

- Modeling the Science Process

- Giving Youth Control

- Developing Science and Engineering Identity

- Making Authentic Assessments of STEM Learning

However, not all participants take every module; modules are offered singly or in clusters, depending on the timing and needs of the particular educators. Each module takes approximately 6-10 hours. This length is intended to go beyond “drive-by” forms of professional development and instead give educators the opportunity to learn a skill, see it in action, try it themselves, and come back as a group to discuss how they are incorporating it into their interactions with youth.

While the ultimate goal of the ACRES project is to train coaches in existing out-of-school programs and networks, for this early phase of the project all of the coaches were MMSA staff or our implementation partners at the University of Maine, Office of Extension (4-H). All had extensive expertise in facilitating STEM learning by youth, as well as in providing professional development (PD) for other educators.

Study 1: Impacts on Participating OST Educators

We conducted two evaluation studies for the early phase of the ACRES work. The first study, reported here, describes the impacts on the participating educators. A companion study, Study 2, summarizes the reflections of the coaches who implemented these coaching sessions.

Sample Selection

For this study, we analyzed and aggregated the results of post-course interviews and surveys conducted with every willing participant from eleven ACRES cohorts between 2014 and 2017. The eleven cohorts included four fully virtual cohorts and seven blended cohorts. Group sizes ranged from 2 to 8 educators, plus the coach. In the blended cohorts, participants met face-to-face as a group with the coach for the initial workshops where each skill was introduced, and then met virtually for the remaining coaching sessions, where videos were shared and discussed. Five of the cohorts were conducted with 4-H staff and volunteers, five with staff from 21st Century Community Learning Centers, and one with afterschool educators from Vermont. Apart from the Vermont cohort, all cohorts were based in Maine. The ACRES team partnered with 4-H extension professors from the University of Maine to recruit 4-H participants and pilot the ACRES modules, and with Vermont Afterschool to recruit and train participants in the Vermont cohort.

Responses from a total of 51 participants who finished the ACRES course were coded and analyzed. The cohorts began with 59 total participants, with 8 participants dropping out by the end of the course (14% attrition rate).

Interview and Survey Questions and Process

The interview process varied somewhat by cohort, reflecting a gradual shift from the early prototyping phase of the project (which emphasized formative evaluation for purposes of improving the program) toward a more stable program ready for summative evaluation. Specifically, earlier cohorts were asked more semi-structured and open questions, while later cohorts were asked a standardized set of questions that were refinements of the original set, usually to increase focus and clarity. Despite this shift in question type and phrasing, the heart of the questions remained relatively unchanged for a core set of constructs, allowing us to aggregate responses from all the cohorts to give an overall sense of program impact.

Appendix A provides the complete set of all the questions that were used, with the total number of responses for each.

In addition to the interviews, participants were asked to rate their confidence in several skills both before the ACRES course (in a pre-interview or survey) and after it (in a post-interview or survey). The rating scale ranged from 1 (not confident at all) to 5 (extremely confident). In the earliest cohorts, these questions were included as part of the interview. In the three most recent cohorts, these questions were asked on a SurveyMonkey survey.

Analysis

Interview and survey responses for individuals in each cohort were entered into an Excel spreadsheet and coded for common core themes. Because of the slight evolution of the interview instrument between cohorts, some cohorts were not asked particular questions. For this reason, in giving the results we have explicitly included the number of respondents to each question.

In some cases, the percentages of different responses to a given question add to more than 100%. This occurred when participants gave multiple responses to the same question (e.g., when asked to identify strengths of the program, they mentioned more than one strength). For clarity, the results section provides the question asked as well as the number of responses.

To complement the quantitative summary of how respondents answered each question, we provide direct quotes from a sample of participants. These have been lightly edited for clarity.

Finally, we share quantitative data using tables to show the pre- and post- confidence ratings of participants on a number of skills the program tried to address.

Detailed Results: Interviews

Interview responses about the ACRES course fell into several major themes, and the structure of this report follows those themes. These include:

- Overall satisfaction

- Skill development and benefits to practice

- Coaching and facilitation of the course

- Group dynamics

- Technology

- Course logistics

- Suggestions for improvements and next steps

1) Overall Satisfaction

Overall, the ACRES project was extremely well-liked by participants, evidenced by the following results:

96% of participants would recommend the course to someone else without any reservations and 93% of participants were interested in taking another course in the same format (N=45).

– “I would recommend it, if nothing else, no matter how long you’ve been doing this, I’ve been doing this for 42 years, if I can learn something out of it, I’m quite sure a lot of other people would.”

– “Oh I would definitely recommend it. Yes absolutely. One of my close colleagues got to see what we were doing and she wished that she had signed up. I would do it again too. I really truly feel I got a free, mini-graduate class.”

When asked to compare ACRES training to other professional development (N=13), several participants (31%) noted that the format of the training encouraged them to “follow through” and act on their learning in ways they had not experienced with other professional development. Other participants valued the science focus (15%), the virtual nature of the training (23%), the length of the training (8%), and its hands-on quality (8%), features they had not found in other trainings.

– “I feel more invested than just a day or two training. It’s given us enough time to take the concepts, talk about them, interpret them. I got some good verbal feedback, and handouts to guide me. And since it was more long-term it definitely helped me get confident in science.”

– “I thought this experience was a lot more productive. A lot of my PD is through [school district]– they front load you on content and strategy but not a lot of time to practice, reflect and get feedback. I thought this was a lot more helpful and impactful to my teaching practice. We get half-days once a month where we learn about something, but it doesn’t stick because we don’t practice it or talk about it again. So, this was different because we did get to practice and be reflective. I thought it was top-notch compared to other PD.”

2) Skill Development and Benefits to Practice

Of the 45 participants who responded to questions about the program’s strengths, 18% identified learning specific STEM-based skills as important to them and 51% said a strength was learning general skills during the ACRES course that transcend discipline, such as Asking Purposeful Questions.

– “The training in this course expanded my thinking about STEM as far as careers and how kids can connect thinking about their future.”

– “I really enjoyed it. Very valuable to me – I learned so many tips and tricks about working with kids, and I’ve never even run a science program before, and I’m in the process of establishing a STEM club, and so this has been the catalyst and has given me the confidence to do that.”

– “The biggest take-away I got was learning some new skills for teaching, so not just the feedback of our own but the classroom time of learning a new skillset.”

– “I’m trying to ask more open ended questions over everything, not just work related, or during an education room class, but just overall, when they get off the bus, not just asking how was your day, but trying to delve in deeper.”

– “I find that I let the kids answer the questions themselves. I don’t prompt anymore. I wait and ask them questions that make them think and solve on their own. It has definitely been a learning curve for me for sure. I guess the Purposeful Questions – I have expanded that to more than just STEM activities. I use it when kids are writing. I use that when kids are doing math or social studies projects. They have to explain to me so they are getting the info embedded.”

– “Nice to take fresh look at my teaching practice. Helped me to reevaluate what I am doing and check in with myself and be more reflective about what I really want the kids to know, be able to do and how to really activate their thinking rather than getting them to answer a question of mine.”

Another strength identified was the resources that were provided (HowtoSmile and Click2Science) that helped to improve the participants’ teaching practice.

– “[The course] will definitely help with planning different activities with kids in afterschool programs. The strengths were all of the resources: the different websites, searching for different age ranges, etc.”

– “I never considered myself a science whiz, and didn’t know if the kids would be interested, but when I did the HowtoSmile experiments and the water filter, the kids received it well and wanted to do it on a weekly basis.”

– “Resources were a strength – especially Click and HowtoSmile and ‘Just ask’ – but HowtoSmile was especially good because of the whole section on 21st century experiments and the repertoire of varied STEM subjects. I like [the] way it was structured. I’m not sure I would have found either on my own without this project.”

When asked about their work with youth, 89% of participants (N=44) felt that their work with youth had changed after taking the ACRES training. Changes reflected the values and skills taught in the ACRES training and included asking more Purposeful Questions (64%), including more student control and ownership in their programming (28%), and promoting STEM identity in students (5%). Other changes included allowing more pauses for student responses after asking a question (10%) and incorporating activities from resources presented in ACRES (13%). Over half (51%) of participants felt that the ACRES training promoted greater self-awareness and changes in mindset about working with youth.

– “I’ve learned to focus more on the process. Having worked with school kids for the past couple of months, they don’t really get the time to tinker and play while they’re doing that, it’s kind of like, this is the right way, this is the wrong way. So, to completely shift that was a struggle, but I found myself more willing to play and to let them learn and explain their processes and see what happens, and give them that extra inch, which I had a really hard time with earlier on, so I think it’s helped in that regard.”

– “I have more patience. It’s less awkward to watch kids be frustrated and struggle through things. I can allow it to happen, instead of wanting to fix it for them. It can be uncomfortable to ask a question and sit back and watch them formulate their answer, but it’s perfectly fine to do that. The process is more important than the final product. And since I don’t work with kids often, the tools I can put in my toolbox are greatly valuable.”

– “Purposeful Questions have been so powerful and impactful for me and the way I teach in my classroom. ACRES is one of the few courses I’ve had that when it is over I am still using what I learned. It has made me a better teacher and made my students better learners.”

– “Yes, it definitely gives me a new standard to live up to with the children, my coworkers, and my boss. It helped me to approach lesson planning in a whole new way and to focus on letting the kids take charge.”

– “Yes. I definitely am using more of the purposeful questions with my students. I’m really implementing it into every activity we are doing to get them critically thinking that way. Also in terms of sharing and explaining findings – whenever we are looking at data I feel I have new questions and tools to pull from so I am eliciting more from my students rather than telling them this is what I see. It has really shifted from ‘me being the teacher’ to focusing on what the kids are thinking and giving them an opportunity to explain it. It’s been great.”

3) Coaching and Facilitation of the Course

A third of participants (33%, N=45) identified receiving feedback from others, a key component of the coaching structure and facilitation of ACRES training, as a strength of the course. The expertise of the coach was specifically mentioned by 11% of participants.

– “Anytime you can get feedback, because a lot of times after you graduate from school, there isn’t anyone to give you feedback, it makes you more mindful when you’re practicing new outcomes. I believe it makes me a better presenter or educator, I like to see what other people are doing too. I lived through an era with No Child Left Behind, someone could come in and evaluate you, tape you, and whether you would be rehired would be based on that. That’s how it was in the public school system that I was in – very stressful. I wasn’t getting any good feedback out of it, whereas with this it was completely open; folks giving good feedback without feeling like my job is at risk.”

– “[Coach] was amazing. I got excellent feedback from her. It was nice to take fresh look at my teaching practice. It helped me to reevaluate what I am doing and check in with myself and be more reflective about what I really want the kids to know, be able to do, and how to really activate their thinking rather than getting them to answer a question of mine.”

A small subset of participants (N=13) was asked directly how they had benefited from the ACRES coach’s experience. Of these, 62% reported that the coach gave good feedback, 46% said the coach made participants feel comfortable, and 38% mentioned the coach’s facilitation skills. Recommendations for improving the coaching included providing more detailed feedback (1 individual) and being more prepared (1 individual).

– “She was a good coach, good facilitator for keeping us on track with our things. I’d like to see perhaps more detailed feedback or a bit more suggestions for improvement from her specifically, as the coach.”

– “I just liked how she facilitated the group. Because I noticed her technique that she never dismissed something, she acknowledged and made it sound valued. She knew when to bring the group back, or how to keep it going if it was dead air. She was great. I think she made everyone feel safe. I felt very, very safe.”

– “Sometimes [Coach] wasn’t as clear as she could have been when it came to the training. She tried to be very influential. She didn’t let us do our own thing, she wanted it done the way she wanted it done. She tried to put her own flair on what we were doing with the kids.”

An important balance in ACRES training is to keep a safe space and a comfortable learning environment, especially while participants are sharing videos of their own teaching practice, while also stretching participants to grow as educators and learn new things. When asked about the balance of “safe vs. stretch,” 60% of participants (N=40) felt that the balance between safety and stretch was “just right” while 30% felt there was too little stretch. Only one person indicated that the course stretched them too much.

– “I think it was maybe a little weighted toward the positive. People were really trying to be positive and see what was done correctly or had improved. As opposed to ‘you might have tried…’ there wasn’t much of that.”

– “It wasn’t really a stretch for us, as soon as we got the assignment we were able to just zoom through it. If there could be different difficulty levels within the program, that would be very interesting to utilize.”

– “In my work I’m so busy, so it was just right. But if I wasn’t actually working and doing other things it probably would have been a stretch too little.”

– “I was comfortable. It made me think of things differently, which is what I wanted, so it provoked me the right amount.”

4) Group Dynamics

When participants were asked to describe whether they benefitted from participating with others in the ACRES course, 100% responded that they did benefit (N=36). Those benefits included receiving feedback from others (53%), seeing others working in a similar setting (42%), the camaraderie of working together in a group (28%), and sharing struggles together (14%).

– “I feel like it’s beneficial to hear from others. Teachers don’t really talk to each other about what they’re doing right or wrong, not a lot of feedback goes into helping you be a better teacher, and that’s what I think was refreshing about this.”

– “I felt that I got to see other ways of doing things that I wouldn’t have thought of to begin with.”

– “I liked seeing the ordinary settings, because I did notice on the site a lot of them were teachers or people used to being in that setting, or being filmed, and everything looked so perfect. So, it was great to see videos of pulling science into ordinary situations.”

– “Even critical feedback was presented in a positive, constructive way. It never felt uncomfortable. And it is always good to have other teachers’ perspectives. Especially because I am an elementary teacher. It was interesting to get perspective from other teachers from middle school or high school.”

– “I benefitted from seeing how other people handled programs or obstacles that you sometimes come across when you’re running the program. When you have the children there sometimes things go wrong and to see how others handled it was a big plus.”

– “I really liked the setup; the women were amazing to talk to and bounce ideas off. The strengths were communication and connection between other teachers. It was cool to connect my experiences to other teachers around the state. I could share my pitfalls of after school work. It can be harder if you’re not with these students every day, and it can be different kids every week.”

Some participants (42%) also mentioned limitations or challenges in working with the other providers. Some wanted more critique of their videos (11%), while others described the difficulty of critiquing others (6%). Still others found it difficult to connect with peers who work with different age groups, or described the challenge of having disengaged participants in their group.

– “Some people gave valuable feedback and some other feedback wasn’t as relevant. I think some was pretty vague – like ‘nice the way you used that question’ or ‘nice that you talked to kids, they seemed to enjoy it’ – that’s nice to hear, but some of it was vague and regular.”

– “It was hard at first to really find stuff in [the videos] that was an opportunity for improvement and presenting it to person is hard to do as well.”

– “I liked the different perspectives but to get the most out of it – if all were teaching the same grade level I was I could have pulled more from what I saw from them. It would have enriched the program that much more if we were all working with same age level.”

– “I think it would’ve been nicer to have more people participate. People weren’t always ready for the meetings when we had them.”

A subset of participants (N=18) were asked about their loyalty to the rest of the group and 94% responded that they felt a high level of loyalty to the rest of their group while participating in ACRES.

– “I wanted to learn, like I didn’t want to miss anything that they talked about that might be brought up again the following week, and I really wanted to see how they’ve been improving, and the work that they’ve been doing, because I think that the coolest part about this is we do get to hear from people who are doing similar work and gain different perspectives. I wanted to see their videos, I wanted to hear their feedback, and I just wanted to interact with people who I knew were doing the same stuff as me.”

– “I like the accountability to the greater group. I feel like since I was committed and depended on their feedback, that I wanted to be there to share the same for them.”

5) Technology

The use of technology (videoconferencing; video capture, editing, and uploading; etc.) is a key component of ACRES that allows rural educators to come together in a way that doesn’t require them to be physically present. When technology was working properly, it was identified as a strength of the ACRES training. However, technological issues that occurred were also the most commonly described challenge or obstacle in the course.

When asked about the strengths of the ACRES course, 27% of participants (N=45) said the technology used in the course was a strength for connecting educators together, especially educators in rural areas. Participants valued being able to watch themselves on video, reflect on how to improve their teaching practice, and observe how peers were implementing the skills learned.

– “The greatest strength of the ACRES course was being able to share videos and receive input from peers. Being able to see how others implemented what we were learning, and how kids responded, was an invaluable learning tool.”

– “I liked the course and I liked the Zoom participation, it made it really easy and I liked that we could connect with people all around the state.”

– “I would say that being able to watch the videos we did and being able to pick at what we had done wrong or what we might have done differently was a strength. When you look at yourself, criticize yourself, you see what you could have done differently.”

However, the most common weakness identified by participants was technological problems (39%, N=33). Participants had trouble uploading videos, and inadequate home Internet service in some rural areas of the state interrupted the flow of discussions. One individual did not feel comfortable using Zoom from home.

– “Some people didn’t have the strongest Wi-Fi, which did make it difficult for them. So, if someone is gone we could keep going, but then they get back on and you catch them up. You might be mid-sentence or mid-thought and someone disappears. It takes time and disrupts the flow. I know sometimes because of Internet speed, the language of videos would get garbled.”

– “There’s a big learning curve on Zoom. Some had technical glitches depending on their operating system. People whose turn it was to share didn’t always have it uploaded or stream-able so that we could hear the video. When you’re in a project focused entirely on observing each other, if you can’t hear the audio, it makes it very hard to do. Sometimes we couldn’t even hear the adults, or the kids.”

– “One obstacle was uploading the videos. If I hadn’t had the assistance of a very experienced technology person, in the form of a father of the youth I was filming, I would have been completely lost.”

Technology was important for many of the ACRES activities and group discussions, and a small subset of participants was asked specifically about some of these activities. When asked about the importance of discussing videos of their own teaching practice with the group, 90% of participants (N=10) felt that this discussion was important. When asked about the importance of watching and discussing the Click2Science videos (professional development videos for educators working in out-of-school-time STEM programs), 73% of participants (N=11) felt the Click videos were important for helping them visualize and model what some of the practices looked like. However, 27% felt that the Click videos were too polished and unrealistic.

– “I think it is very important. We want to grow in our teaching. It’s one thing to watch a video of someone else but we as teachers have our own styles and personalities, so to be able to get feedback on my own specific teaching and how I can apply the skills to what I am doing is THE MOST valuable thing. Watching someone else only would not have given me the same learning experience.”

– “I do think those are important – nice to see a model, especially for purposeful questions – I can assume I can understand what that means, but so nice to see that in action.”

– “They all looked quite polished and the kids were in a formal school setting, or afterschool setting. I know part of it is setting up these spaces, but many of us don’t have access to that. You want to do as much as you can, but don’t want us to feel: I don’t HAVE a classroom, I don’t HAVE a gym, I don’t HAVE that teaching experience.”

6) Course Logistics

The timing and scheduling of the ACRES course was a challenge for some participants (26%, N=35). Some participants wished the training had occurred earlier in the year, wished it were condensed into a shorter time period, or felt it was a little dragged out. For some participants, it was difficult to predict their schedules in advance.

– “I think there was too much time in between, so I would lose my momentum or forget what we were learning.”

– “The only thing was the time span between when we did the modules and when we were able to do the project with the kids.”

– “It felt a little dragged out. I thought it would be a little bit more compact. Didn’t think it would take this long. Could have happened more quickly.”

– “When we got the calendar, because it was spread out for a long period of time, so it made me feel like, it made it harder to schedule. I put it off longer than I should have.”

– “It was the wrong time of year, that’s all. I wish it had been earlier in the year.”

– “Predicting my schedule for the number of weeks we were doing it was an obstacle. It was a bit overwhelming, the number of weeks, from February through June. Making sure I had my Thursday mornings cleared, and that’s another reason I didn’t sign up for the next round. I was uneasy that I could make the commitment.”

When a small subset of participants (N=12) was asked about the length of the course, 75% of participants felt they had enough time to complete everything in the course, while 25% felt the course was a bit rushed.

– “The timing was great. I do think having the last meeting if people have time, having a get-together at the end would be beneficial, but realistically I don’t know about people’s time. Just to have a conclusion face to face.”

– “I think it was rushed slightly. Another time or two would have been nice. I did like the personal contact we had in the classroom, though I understand it wasn’t always practical, but I would have liked us to meet one more time.”

For some participants (15%, N=33), certain activities were not appropriate for, or did not connect with, their programming. Two people specifically mentioned the turbidity lesson as problematic.

– “The last one, the turbidity, there were so many different variables, it was a little tough to pull off. I guess I think sometimes the kids need a more specific objective. The turbidity one was too wide-open.”

– “I think one part that was difficult at times was that I had to shift gears in the curriculum I was doing with my students – a completely different lesson – in a way that broke up my teaching a bit. If there is more support to figure out how to take the skill you are learning and apply it to what you are teaching now, I don’t have to do something totally different.”

– “I think that the course should be more aimed toward the kids that we work with…like there was one part where we could pick the activity. That’s better because it’s focusing on what you know about the kids you’re working with and what they’re interested in.”

7) Suggestions for Improvement and Next Steps

Participants in ACRES had several recommendations for how the course could be improved including:

a. Working out the issues with technology

– “I think I’d survey the program directors to see if they can do that face-to-face with a technology workshop, so they can be ready to go with Zoom.”

– “I think clarifying that you do need to have more high-speed Internet just because that would help the overall flow.”

b. Sending out materials for the activities ahead of time

– “The first time we did the experiment, some people couldn’t get their hands on the materials right away, so maybe sending the materials with the welcome packet.”

c. Making sure all participants are aware of expectations for participation

– “Going back to weakness of not everyone being prepared, having the expectation that you need to come prepared so you’re not wasting people’s time. I think that could be an improvement is increasing that expectation.”

d. Having cohorts of participants who teach in a similar age range

– “If I was a grade school teacher I would have gotten more about what other grade school teachers did and vice versa. Doing it by grade levels or MS/HS and elementary school would be better. Everyone is at different levels and have different challenges.”

e. Extending the program longer

– “I like the idea of doing the program longer term. It’s a lot of work. But just to do two videos and have that feedback was great. But to keep it up over 6 months would be really cool. Especially for teachers or afterschool providers who are just starting. It would be valuable to extend it and do more of the STEM teaching skills.”

f. Incorporating other subjects (math and art)

– “I feel like we did a lot with science and engineering. If we could tuck in a little more math. I know this is STEM training, not STEAM training, but finding a way to have STEM, but also artsy. We have so many kids who like art.”

g. Having a variety of activities for participants to choose from

– “I think it would have been better going onto that website and finding activities that suit your kids, rather than teaching an activity that they aren’t interested in.”

When thinking about future recruitment for ACRES courses, participants (N=41) had many ideas about what populations might benefit from this training:

– 51% of participants thought the ACRES course would be beneficial for anyone working with youth or in education.

– “Everybody. People with shyness or low self-esteem. They might be able to open up more, or say hey I’m not as bad as I thought I was.”

– “Anyone who works in a hands-on way with youth. I work with youth both during the school day and in afterschool programming. The purposeful questions have been useful in both settings and have better prepared me to work with youth in the 4-H Experiential Learning Model.”

– “This course is well suited for anyone that works with youth in a group setting. While the topics covered are geared towards STEM activities, I found the information useful for other activities that I do with youth. I lead a toddler story-time, and found that the techniques learned in ACRES are useful to me, even though storytime is literacy focused, and I don’t often include STEM.”

– 27% of participants thought it would help new educators or teachers gain experience working with youth.

– “New teachers like myself – afterschool teachers obviously. For me, as a new teacher, although I’ve been a teaching Ed Tech for 4 years, it was good to be more reflective again.”

– “Those new to working with kids. I think it would help someone who felt not confident about working with kids feel more confident.”

– 22% thought the ACRES training would be beneficial for classroom teachers.

– “I think us older teachers. It’s been awhile since we took practical courses. Sometimes we get stuck in our ways.”

– 10% thought it would help people build their confidence in teaching science.

– “A person who lacks confidence to do science experiments with kids but has the interest. Someone who’s worked with kids for a while, but hasn’t done science necessarily.”

– Other populations that participants thought would benefit from this course included summer camp counselors, homeschoolers, and site directors that oversee educational programs and volunteers.

Participants suggested a range of skills to focus on in additional ACRES courses, including:

- Student and behavior management

- Facilitating student discussion and reflection

- Instructional techniques for engaging students

- Instruction on technology

- How to choose curriculum

- How to encourage STEM identities in students

- Facilitating the science process

- Earth science

- Engineering

- Math

- Literacy

- Continuing to build on existing ACRES courses

Detailed Results: Confidence Ratings

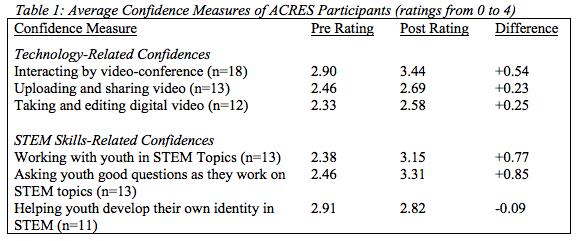

Participants in the later cohorts were asked to rate their confidence in particular skills before and after taking the ACRES course, using a 5-point scale. The following table (Table 1) shows average pre- and post-course ratings for each skill, with 0 corresponding to “not confident at all” and 4 corresponding to “extremely confident.”

Although the sample sizes are too small to warrant any statistical conclusions, the table shows promising emerging trends in the data: every skill related to working with video technology showed an increase in confidence, and two of the three target skills related to STEM facilitation also showed increases. We plan to continue collecting data as the project moves forward, so that statistical analyses will be possible in the future.

Conclusions

While the number of participants in the study was too low for statistical analyses, especially for the quantitative measures that were introduced for later cohorts only, we nevertheless draw the following inferences:

- The program enjoys very high levels of satisfaction from participating educators.

- The program shows promise in increasing participating educators’ confidence, both in the skills the course teaches (STEM facilitation) and in the technological skills required to participate (videoconferencing and creating video clips).

- While this study did not attempt to assess actual changes in participants’ STEM facilitation skills, it is promising that the vast majority of participants (almost 90%) believed the program had changed the way they work with youth.

All of the participants’ suggestions for improvement have been passed on to the program development team, which has already adopted several of them.

References

Afterschool Alliance. (2014). America After 3PM: Afterschool Programs in Demand. Retrieved from http://www.afterschoolalliance.org/documents/aa3pm-2014/aa3pm_national_report.pdf

Afterschool Alliance. (2015). America After 3PM Topline Questionnaire. Retrieved from http://www.afterschoolalliance.org/documents/AA3PM-2015/AA3PM_Topline_Questionnaire.06.09.15.pdf

Appendix A: Interview and Survey Questions

Interview Questions

1. How was the course for you overall? What were its strengths? Weaknesses? (N=36)

2. What were the greatest obstacles? How did you overcome them? (N=36)

3. Do you feel you benefited from the coach’s experience? (N=13)

4. Do you feel you benefited from the sharing of other providers/frontline staff in your group? Any limitations or challenges? (N=36)

5. How does this experience compare so far with other PD experiences you’ve had (if any)? (N=13)

6. How important was it to have discussion of your own videos? (N=10)

7. How important was it to see the CLICK videos? (N=11)

8. Has there been enough time for everything, or has anything felt rushed? (N=12)

9. Overall, could the course be shorter? How? (N=34)

10. Do you think your work with youth has changed since our first interview? How? (N=44)

11. How could the program be improved? (N=36)

12. It is a bit difficult for the coach because they have to keep the safe space so nobody feels embarrassed, but they also want to stretch people to learn new things. How was that balance for you? Would you have preferred a bit more stretch, a bit less stretch, or was it about right? (N=40)

13. Can you imagine recommending this course to someone else, or would you have reservations? (N=45)

14. What kind of person do you think would benefit most from it? (N=41)

15. If there was another course in this same format, would you be interested in participating? (N=45)

16. What do you think would be a good “next skill” to focus on? (N=44)

17. How loyal did you feel to the group, to the point that you wanted to keep showing up and doing the work because you didn’t want to let them down? Not at all, somewhat, or very much? (N=18)

Confidence Questions Asked on Pre- and Post- Interview or Survey

1. How confident do you feel about using live videoconferencing to see and hear your colleagues? Rate from 1 (not confident at all) to 5 (extremely confident). (N=18)

2. How confident do you feel about working with youth in STEM topics? Rate from 1 (not confident at all) to 5 (extremely confident). (N=13)

3. How confident do you feel about your ability to take digital videos and edit them? Rate from 1 (not confident at all) to 5 (extremely confident). (N=12)

4. How confident do you feel about your ability to upload videos and share them with others? Rate from 1 (not confident at all) to 5 (extremely confident). (N=13)

5. How confident do you feel about your ability to give others constructive feedback on their work with youth? Rate from 1 (not confident at all) to 5 (extremely confident). (N=20)

6. How confident do you feel about your ability to ask youth good questions as they work on STEM activities? Rate from 1 (not confident at all) to 5 (extremely confident). (N=13)

7. How confident do you feel about your ability to help youth develop their own identity as someone who can contribute to science, technology, engineering, or math? Rate from 1 (not confident at all) to 5 (extremely confident). (N=11)
